Be Very Careful with AI Agents!

AI agents—software that can take actions on your behalf using artificial intelligence—are having a moment. The appeal is obvious: imagine a robot butler that triages your inbox, manages your calendar, and handles tedious tasks while you focus on more important work.

That’s the promise driving the recent surge in popularity of OpenClaw (formerly known as Clawdbot and Moltbot), which is now all the rage in tech circles. Token Security found that at least one person is using it at nearly a quarter of its enterprise customers, mostly running from personal accounts. That’s a shadow IT nightmare—employees connecting work email and Slack to an unsanctioned tool that IT doesn’t know about and can’t monitor. Whether you’re an individual tempted by OpenClaw’s promise or a manager wondering what your users are up to, you need to understand the risks these AI agents pose.

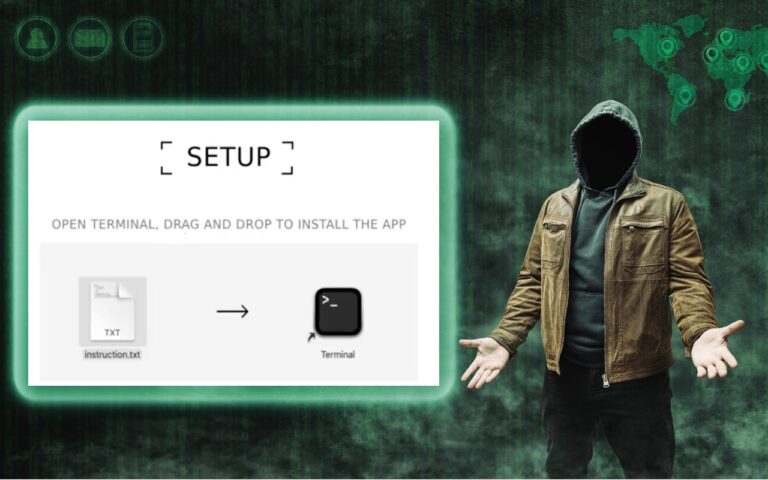

OpenClaw is an AI agent built around “skills”—installable plugins that let it integrate with your messaging apps, email, calendar, and more. You communicate with OpenClaw via Messages, Slack, WhatsApp, and similar apps. Because it’s open source, you’ll need to provide your own API keys for AI services like OpenAI or Anthropic, which means ongoing costs that can add up quickly—people have reported spending $10–$25 per day.

The more serious problem? Security researchers have discovered serious vulnerabilities, including misconfigured instances exposed to the internet that leak credentials, API keys, and private messages, and a supply chain vulnerability where malicious skills uploaded to the ClawdHub library can execute arbitrary commands on users’ systems. Even beyond specific bugs, OpenClaw’s fundamental design encourages users to grant broad access to sensitive accounts.

Why AI Agents Are Risky

Security concerns aren’t unique to OpenClaw—they apply to any AI agent that acts on a user’s behalf. Here’s what’s at stake:

- Credential exposure: For an AI agent to send emails, manage your calendar, or post to Slack, it needs your authentication tokens or login credentials. If the agent software stores these credentials insecurely, or an attacker gains control, they could be exposed.

- Prompt injection: AI agents work by following instructions, but they can’t easily distinguish between prompts and data in the content they use. A class of attacks called “prompt injections” trick AI systems by hiding malicious content in emails, websites, or documents that will be processed. An attacker could embed instructions in an email that would cause your agent to search for and forward email messages containing passwords or financial data, follow links to malware sites, or take other harmful actions. There is currently no foolproof defense against this class of attack.

- Data exfiltration: An AI agent with access to your email and your computer’s filesystem could be manipulated to extract information from elsewhere on your computer—financial data, customer lists, or personal details—and send it to an attacker.

- Unvetted extensions: OpenClaw and similar AI agents let users install “skills” or plugins to extend functionality. Libraries that allow users to share custom skills often have minimal or no security vetting, making it easy for attackers to submit poisoned skills. Installing such a skill could grant malicious code access to everything your agent can touch.

- Exposed control interfaces: Security researchers found OpenClaw control servers exposed on the Internet, potentially leaking API keys, VPN credentials, and conversation histories. This risk is unique to OpenClaw at the moment, but future AI agents may suffer from similar vulnerabilities, particularly as they’re adopted by less technically savvy users.

How to Reduce Your Risk

We’ll come right out and say it: we strongly recommend against installing OpenClaw or other AI agents on your Mac. In a year or so, Apple may have updated Siri to provide many of these capabilities with significantly stronger privacy and security. But for now, just say no.

If you decide to use AI agents despite these risks, here are practical steps to protect yourself:

- Use dedicated accounts: When possible, create separate accounts specifically for agent use rather than linking your primary personal or work accounts.

- Limit permissions: Grant the agent access only to accounts it absolutely needs. If you only want help with your calendar, don’t also connect your email and messaging services.

- Avoid connecting sensitive services: Never connect anything involving money, healthcare, or confidential business information. The liability is too high if something goes wrong.

- Review agent actions: If the platform offers logs or activity feeds, check them regularly. Look for unexpected messages sent, files accessed, or connections made.

- Vet extensions carefully: Don’t install skills or plugins from unknown sources, and even with known libraries, look for evidence of others using and reviewing the skills. Treat skills like any other software you’d install on your computer.

- Keep software updated: Security patches for OpenClaw and similar tools address known vulnerabilities. If you’re running an agent, keep it up to date.

- Run agents in isolated environments: Technical users should consider running agents in sandboxed environments or virtual machines to limit potential damage.

If you run a business, you should assume that some employees have already installed OpenClaw or will soon, and may have connected their work email and Slack accounts without realizing the associated risks. Here’s what you can do:

- Educate before it’s a problem: Proactively explain the risks to employees. People are more receptive before they’ve already invested time setting something up.

- Update acceptable use policies: Make clear that connecting work accounts to unsanctioned AI agents is prohibited, and explain why.

- Offer sanctioned alternatives: If employees want AI assistance, point them toward safer options that don’t require handing over credentials to sensitive accounts.

What About Claude Cowork and OpenAI Codex?

Not all AI agent platforms carry the same level of risk. Anthropic’s Claude Cowork and OpenAI’s Codex take a different architectural approach from OpenClaw. Rather than requesting authentication tokens for your email, messaging, and other personal services, they operate within their own controlled, sandboxed environments. These systems work primarily with files, code, and data you explicitly place into their workspace, which substantially limits the fallout from an attacker gaining some level of control.

This containment approach reduces risk, but does not eliminate it. Prompt injection remains a concern whenever an AI system processes untrusted content, even inside a sandbox. An AI agent analyzing a malicious document could still be manipulated into taking unintended actions within its allowed environment. Similarly, any code generated by these systems—particularly code that touches the network or executes system commands—should be reviewed carefully to make sure it hasn’t been compromised by prompt injection.

The key distinction is scope. Claude Cowork and Codex are designed to operate within a defined workspace, whereas tools like OpenClaw require standing access to your most sensitive accounts. From a security perspective, a compromised sandbox is a recoverable incident; a compromised email or messaging account may not be.

The Bottom Line

AI agents promise a lot and may provide genuine convenience, but at a cost beyond just paying for API tokens. Before you or anyone in your organization connects an AI agent to sensitive accounts, consider: What’s the worst that could happen if this system were compromised by an attacker? If the answer involves passwords being stolen, private email being exposed, or photos being posted to social media without your knowledge, proceed with extreme caution. If you can imagine a way financial accounts could be accessed or business data stolen, don’t proceed at all.

(Featured image by iStock.com/Thinkhubstudio)

Social Media: AI agents like OpenClaw promise to automate tedious tasks, but recent security vulnerabilities highlight the dangers of using them. Learn the risks and how to protect yourself—and your organization—if you choose to use an agent.